OpenAI is an organization dedicated to researching and advancing artificial intelligence. It consists of a for-profit technology company (OpenAI LP) and a non-profit parent company (OpenAI Inc.) and was founded in 2015 with the aim of creating and promoting AI that benefits humanity. With a focus on safe and responsible development, OpenAI works to make cutting-edge AI technology accessible to people everywhere.

OpenAI created the language model known as GPT-3, or Generative Pre-trained Transformer 3. When it was released in 2020 it was the largest language model OpenAI had built, and it was regarded as one of the most sophisticated of its time. With 175 billion parameters, GPT-3 can generate text that resembles human writing, translate between languages, summarize lengthy documents, answer questions and carry out a variety of other language-related tasks, often with high accuracy.

GPT-3 marks a major advancement in AI language modeling and has garnered significant interest from scientists, corporations and the general public alike. This blog post delves deeper into GPT-3’s capabilities, covering its training, use cases, strengths and drawbacks.

What is GPT-3?

Explanation of GPT-3 and its significance in the field of AI:

GPT-3 is a language model from OpenAI that uses deep learning to produce human-like text. It is built on the Transformer architecture, which has become the de facto standard for contemporary language models. The “3” in its name marks it as the third Generative Pre-trained Transformer in the OpenAI family, after GPT-1 and GPT-2.

GPT-3 matters to the field of AI because it produces text that is both coherent and meaningful. It outperformed earlier language models on a wide range of language-related tasks, both in comprehending and in producing human language.

Comparison with previous language models (GPT-1 and GPT-2):

GPT-3 represents a leap forward from its predecessors, GPT-1 and GPT-2. GPT-1 (2018, about 117 million parameters) was a comparatively basic language model. GPT-2 (2019, about 1.5 billion parameters) produced text much closer to human writing, but it was still limited in its capabilities and was trained on a smaller dataset than GPT-3.
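To get a feel for the jump in scale, the sketch below estimates each model's parameter count with a common rule of thumb: roughly 12 × layers × width² for the Transformer blocks, plus the embedding tables. The function name `approx_params` is made up for this post, and the layer counts, widths, vocabulary sizes and context lengths are recalled from the published model descriptions, so treat them as assumptions rather than official figures.

```python
# Rule-of-thumb parameter count for a GPT-style Transformer.
# Per layer: about 4*d^2 attention weights plus 8*d^2 feed-forward weights
# (assuming a feed-forward hidden size of 4*d). Biases and layer norms are ignored.

def approx_params(n_layers: int, d_model: int, vocab_size: int, context_len: int) -> int:
    blocks = 12 * n_layers * d_model ** 2
    embeddings = (vocab_size + context_len) * d_model  # token + position tables
    return blocks + embeddings

# (layers, width, vocabulary size, context length) -- assumed values, see above.
CONFIGS = {
    "GPT-1": (12, 768, 40478, 512),
    "GPT-2": (48, 1600, 50257, 1024),
    "GPT-3": (96, 12288, 50257, 2048),
}

if __name__ == "__main__":
    for name, cfg in CONFIGS.items():
        print(f"{name}: ~{approx_params(*cfg) / 1e9:.2f} billion parameters")
```

Under these assumptions the estimates land close to the commonly quoted sizes (about 0.12, 1.5 and 175 billion), which shows that GPT-3 is roughly a hundred times larger than GPT-2.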

Key features and capabilities of GPT-3:

GPT-3 has several key features and capabilities that set it apart from previous language models. These include:

- Large-scale training data: GPT-3 was trained on a massive dataset, which allowed it to learn and understand a wide range of topics and language styles.

- Advanced architecture: The Transformer architecture used in GPT-3 is designed to process large amounts of data efficiently.

- Human-like text generation: GPT-3 can generate human-like text, making it a valuable tool for content creation and other language-related tasks.

- Ability to perform multiple language-related tasks: GPT-3 can answer questions, summarize articles, translate languages, and perform other language-related tasks, making it a versatile tool.

- Improved performance: GPT-3 outperforms previous language models on a variety of language-related tasks.

Training process of GPT-3

Data sources used for training GPT-3:

GPT-3 was trained on an enormous dataset drawn from text sources such as websites, books and other written material. The training data covers a diverse array of topics and language styles, allowing GPT-3 to learn and comprehend a broad spectrum of information.

Explanation of the language model architecture:

GPT-3’s architecture is based on the Transformer design, which has become the de facto standard for most contemporary language models. The Transformer can handle and process large volumes of data efficiently. It stacks a number of self-attention and feed-forward layers that work together to process the input and produce the output.
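As a rough illustration of those two layer types, here is a minimal sketch in plain Python of one self-attention step followed by one feed-forward step. It is not GPT-3's actual code: it uses a single attention head, tiny hand-written matrices, ReLU as the activation, and it leaves out residual connections and layer normalization. All function names and the toy `tokens` vectors are assumptions made for this example.

```python
import math

Matrix = list[list[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores: list[float]) -> list[float]:
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal mask."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(q[0]))
    out = []
    for i, q_i in enumerate(q):
        # Position i only looks at positions 0..i, never at later tokens.
        scores = [sum(a * b for a, b in zip(q_i, k[j])) / scale for j in range(i + 1)]
        weights = softmax(scores)
        out.append([sum(w * v[j][c] for j, w in enumerate(weights))
                    for c in range(len(v[0]))])
    return out

def feed_forward(x: Matrix, w1: Matrix, w2: Matrix) -> Matrix:
    """Position-wise feed-forward layer: linear, ReLU, linear."""
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

# Toy input: three made-up 2-dimensional token vectors, identity weights.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(tokens, identity, identity, identity)
output = feed_forward(attended, identity, identity)
```

Each output row is a weighted mix of the current token and the tokens before it, which is how the model lets earlier words influence the representation of later ones. A full model repeats this pair of layers many times with learned weights.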

How the training process helps GPT-3 to perform tasks effectively:

Training exposes GPT-3 to a large volume of text, from which it learns a wide range of information and develops the ability to write language that is relevant and cohesive. It is the combination of this training method with the Transformer architecture that lets GPT-3 carry out a variety of language-based tasks accurately.
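GPT-style models are trained to predict the next token of a text from the tokens before it. The toy sketch below shows that idea with simple word-pair counts instead of a neural network, so it is only an analogy for the objective and says nothing about how GPT-3 is implemented. The function names and the three-sentence `CORPUS` are invented for this example.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Repeatedly append the most frequent follower of the last word."""
    words = [start]
    for _ in range(max_words - 1):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never seen with a follower
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

CORPUS = [
    "gpt predicts the next word",
    "gpt predicts the next token",
    "gpt predicts the next word again",
]

model = train_bigram(CORPUS)
print(generate(model, "gpt"))  # prints "gpt predicts the next word"
```

The more text such a predictor sees, the better its guesses become. GPT-3 applies the same objective at a vastly larger scale, with a Transformer in place of the lookup table.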

Because training teaches it to understand and produce human language, GPT-3 is an asset in a variety of industries. After training on a large and diverse dataset, it can handle tasks that closely resemble human work, such as answering questions, summarizing documents and translating languages. Its sophisticated architecture and vast training data made GPT-3 stand out as one of the most advanced language models available.

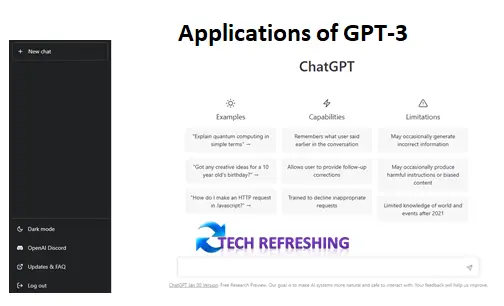

Applications of GPT-3

Use cases in various industries (e.g. customer service, content creation, etc.):

GPT-3 has diverse applications and can be used in sectors such as customer support and content creation. In customer support, it can automate answers to frequently asked questions, lightening the burden on human agents. In content creation, it can generate articles, summaries and other written material.
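One common way to steer GPT-3 toward a task like FAQ answering is to put a few worked examples in the prompt and let the model continue the pattern. The sketch below only builds such a prompt as a string. The helper `build_faq_prompt` and the sample questions and answers are hypothetical, and the call that sends the prompt to the model is left out because it depends on an API key and on the client library version in use.

```python
def build_faq_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Assemble a few-shot prompt: instruction, solved examples, new question."""
    lines = ["Answer the customer's question in one or two sentences.", ""]
    for q, a in examples:
        lines += [f"Q: {q}", f"A: {a}", ""]
    lines += [f"Q: {question}", "A:"]  # the model is expected to continue after "A:"
    return "\n".join(lines)

# Invented example data for illustration only.
EXAMPLES = [
    ("How do I reset my password?", "Use the 'Forgot password' link on the login page."),
    ("Can I change my delivery address?", "Yes, as long as the order has not shipped yet."),
]

prompt = build_faq_prompt(EXAMPLES, "How long does a refund take?")
print(prompt)
```

Ending the prompt with an open `A:` invites the model to write the answer in the same style as the examples. In a real support tool, the generated answer would still need review before it reaches a customer.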

Examples of successful implementation of GPT-3:

GPT-3 has been integrated successfully across multiple industries. OpenAI has collaborated with several organizations to build language-based applications using GPT-3, such as chatbots for customer support and content generation tools for marketers. Independent developers and organizations have also used GPT-3 to create new tools and applications.

Potential impact of GPT-3 on different industries:

GPT-3 could substantially change how businesses operate. In customer service, for instance, it can notably reduce the workload of human agents, freeing up time and resources for more intricate tasks. In content creation, it can draft content much faster than a human could, enabling companies to produce more content in less time.

Overall, GPT-3 can be a useful tool for a variety of industries and has the potential to change how companies function. Its capacity to carry out a number of language-related tasks accurately and efficiently makes it attractive to companies that want to automate and streamline their processes.

Advantages and Limitations of GPT-3

Benefits of using GPT-3 technology:

There are several benefits of using GPT-3 technology, including:

- High accuracy: Thanks to its extensive training data, GPT-3 can produce fluent, high-quality text, so it works well for a range of language-related tasks.

- Ease of use: GPT-3 is a practical and user-friendly solution for organizations because it can be integrated into a range of tools and applications.

- Time-saving: GPT-3 can perform many language-related tasks in a fraction of the time it would take a human, freeing up time and resources for more complex tasks.

- Cost-effective: By automating various language-related operations, GPT-3 can help organizations save on labor costs.

Challenges and limitations of GPT-3:

Despite its many benefits, there are also several challenges and limitations to using GPT-3, including:

- Bias: Although GPT-3 was trained on a sizable dataset, biases present in that data can show up in the model’s output. This is a concern in sectors like customer service, where biased chatbot language could have harmful consequences.

- Data privacy: GPT-3 was trained on a massive amount of text data, which raises concerns about data privacy and security.

- Limitations in performing specific tasks: Although GPT-3 handles a wide range of language-related tasks well, there are still tasks it cannot complete reliably, such as those that call for specialized knowledge or abilities.

GPT-3 is a powerful tool with many benefits, but it is important to consider the challenges and limitations when evaluating its use for a specific task or industry.

Conclusion

OpenAI’s GPT-3 is a sophisticated language model that represents a major advance in AI language modeling. With 175 billion parameters, it can generate human-like text, translate languages, summarize documents, answer questions and perform a range of other language-related tasks, often with high accuracy.

GPT-3 stands out among language models due to its large-scale training data, advanced Transformer architecture and improved performance. The training process of GPT-3 involves exposure to a vast and diverse dataset, allowing it to learn and comprehend a broad range of information.

GPT-3 has diverse applications in industries such as customer service, content creation, and more. Despite its capabilities, GPT-3 also has limitations and the development of AI should continue to be monitored and regulated to ensure it benefits humanity as a whole.

The future of GPT-3 and its impact on AI technology is exciting and holds much promise. As AI technology continues to advance, we can expect to see even more advanced and sophisticated language models, further transforming the way businesses operate and changing the world.